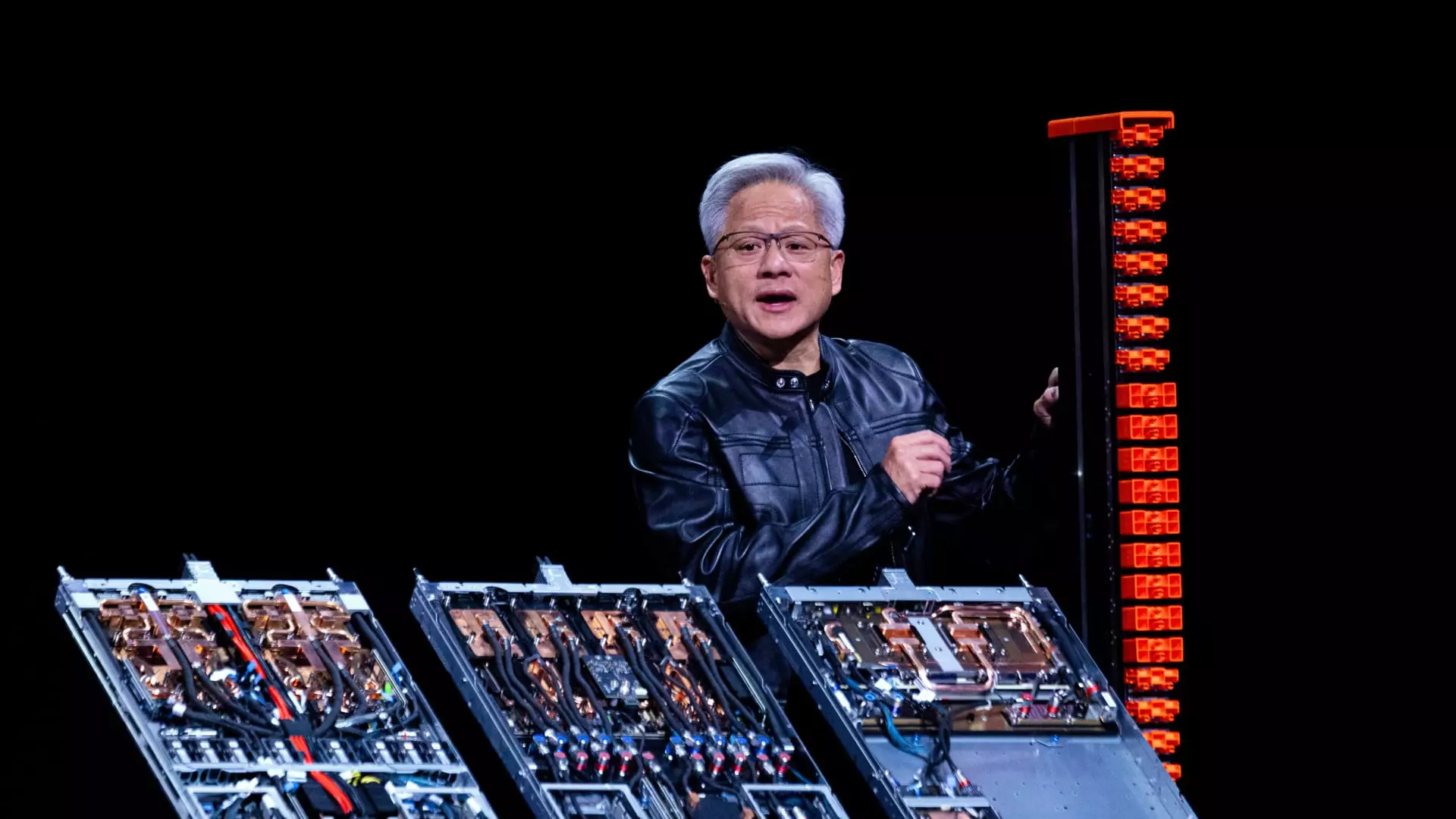

In an electrifying announcement, Jensen Huang, CEO of Nvidia, has set the stage ablaze with the introduction of the “NVLink Fusion” program. The initiative marks a major shift in how artificial intelligence infrastructure is designed. Historically, Nvidia’s NVLink technology worked only with Nvidia components, creating a walled garden that safeguarded the company’s market dominance. Breaking from this restrictive model, NVLink Fusion aims to let Nvidia’s GPUs and CPUs work alongside chips from other companies, forging a collaborative ecosystem that promises to foster innovation.

Huang’s vision rests on the promise of a more integrated AI landscape, where semi-custom infrastructure can flourish. By opening NVLink to non-Nvidia central processing units (CPUs) and application-specific integrated circuits (ASICs), NVLink Fusion positions Nvidia not just as a chipmaker but as a strategic enabler of adaptable, bespoke AI systems. In essence, this is Nvidia stepping out of its comfort zone, and quite frankly, it is a welcome move.

Embracing Collaboration Over Isolation

The announcement resonates deeply in a landscape often defined by cutthroat competition. With partners like MediaTek and Qualcomm Technologies joining hands under NVLink Fusion, Nvidia is signaling a shift from traditional market rivalries toward a more collaborative approach. This endeavor solidifies Nvidia’s central role in the next generation of AI development, both as a supplier and as a framework for others to build upon. The sentiment is clear: by allying with various industry players, Nvidia aims to become the nexus of AI infrastructure, emphasizing the power of collaboration rather than isolation.

Financially speaking, this could be a double-edged sword. Enabling third-party CPUs could lower demand for Nvidia’s own CPUs, but it may also open up an expansive pool of data centers seeking flexibility. The gamble is steep, yet the payoff could be enormous if NVLink Fusion’s model catches on and turns Nvidia into the indispensable backbone of AI-centric technology.

Responses from Industry Analysts: Optimism with Cautious Underpinnings

Industry analysts are buzzing with opinions, and the reactions reflect both excitement and skepticism. Ray Wang, a semiconductor and technology analyst, highlights that NVLink Fusion represents Nvidia’s tactical shift to capture a larger slice of the lucrative data center market, which is seeing an influx of competition from custom processors being developed by tech giants like Google and Microsoft. The underlying tension is palpable: Nvidia is in a race not only for market share but for the very definition of AI infrastructure.

Yet some analysts voice concerns about the long-term implications of such integrations. Rolf Bulk warns of the risk involved: customization comes at a cost, and demand for Nvidia’s proprietary components may wane in favor of alternatives. It’s a precarious position, one that calls for a delicate balance between innovation and self-preservation.

The Competitive Landscape: Nvidia vs. The Titans

While Nvidia has historically held court as the preeminent force in general-purpose AI training, the absence of competitors like Broadcom, AMD, and Intel from the NVLink Fusion ecosystem raises eyebrows. It is telling, reflecting the delicate balance of power in a rapidly advancing industry where innovation is both a boon and a battleground. Still, Nvidia’s proactive initiative demonstrates an understanding that the nature of competition is evolving.

With competitors increasingly designing custom components specific to their needs, Nvidia’s more open approach through NVLink Fusion could be its lifeline. The agility it promises might just trump traditional competitors who cling to proprietary approaches instead of collaborating on initiatives that define the future.

A Bold Leap from Tradition

Huang’s bold proclamations at the Computex 2025 conference in Taiwan underscore a transition that has been long overdue. The launch of products like the “GB300” systems and “DGX Cloud Lepton,” a compute marketplace connecting developers with extensive GPU resources, further solidifies Nvidia’s bid not merely to maintain its position but to re-energize it.

It’s an invigorating time for AI enthusiasts and developers alike. As Nvidia strives to redefine how we conceptualize AI infrastructure, it sets a precedent for the tech industry at large, encouraging a paradigm where progress is intertwined with partnership. The ripple effects of these developments will likely reverberate through the industry, reshaping not just what AI can do but how it will be integrated into the very fabric of technological evolution.
